As part of its current effort to collect a “big data” set of newspaper and magazine articles, government documents, and other public discourse on the humanities for study through computational text analysis, the 4Humanities WhatEvery1Says research project acted on an intriguing suggestion by team member Professor Jeremy Douglass (UC Santa Barbara) to extend its notion of public discourse to patents.

The project team collected a plain-text corpus of all U.S. patents related to the humanities issued since 1976 (the first year for which full digital text is available in the U.S. Patent Office’s searchable archive). Alan Liu scraped the patent descriptions as plain text (to facilitate study through computational text analysis) and classified each as either “Humanities Patents” (mentioning “humanities” or “liberal arts” in a notable way) or “Humanities Patents – Extended Set” (mentioning “humanities” or “liberal arts” in a peripheral manner or in citations). Because patent descriptions are in the public domain, 4Humanities can present them here as folders of plain-text files downloadable in zip form. (Diagrams and other visuals are not included.)

Much of the leading-edge scholarly response to public discussion about the apparent decline of the humanities has revolved around translating the older notion of “general” and (more recently) “flexible” humanities knowledge into terms relevant to current socioeconomic change, such as “open access” and “collaboration.” Normally, these concepts are seen through the lens of copyright issues. Little attention has been paid to patents as part of the modernization of the humanities.

Gutenberg did not have a patent on print. However, thinking about the humanities in society today, researchers may need to evaluate critically how the humanities should adapt to the fact that he didn’t.

- Metadata (Excel spreadsheet)

- Humanities Patents (76 patents related to humanities or liberal arts) (zip file)

- Humanities Patents – Extended Set (336 additional patents that mention the humanities or liberal arts in a peripheral way, e.g., only in reference citations and institutional names of patent holders, as minor or arbitrary examples, etc.) (zip file)

- Humanities Patents – Total Set (412 patents; combined set of above “Humanities Patents” and “Humanities Patents – Extended Set”) (zip file)

Topic Models of This Data Set

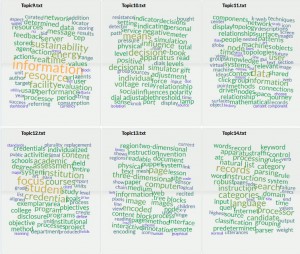

4Humanities has created initial topic models of the Humanities Patents (spreadsheet of 30-topic model) and the Humanities Patents – Total Set (spreadsheet of 30-topic model). The topic models seem to be highly legible (perhaps owing to the tightly constrained form of patent descriptions). In essence, each topic in the models is a kind of doorway through which the humanities are invited into the highly technical, formalized, monetized world of late modernity as envisioned in patents. From the point of view of patents as a form of knowledge, the main doorways today carry labels such as “information,” “documents,” “education,” and “search,” rather than such older humanistic portals as “philosophy,” “literature,” and “history.”

Topics visualized as word clouds (full set of word clouds):

- Word clouds of Humanities Patents topic model (30 topics)

- Word clouds of Humanities Patents – Total Set topic model (30 topics)

Topic Modeling Details

Topic models were created using Mallet version 2.0.8RC2 on a scrubbed version of the patent descriptions to which an extra stopwords list (additional to the default Mallet stopwords) was applied.

- Humanities Patents (scrubbed files)

- Humanities Patents – Extended Set (scrubbed files)

- Humanities Patents – Total Set (scrubbed files)

- extra_stopwords.txt
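
As a rough illustration of the setup described in this section, the following Python sketch assembles (without running) the two Mallet command lines one might use: an import step that applies the default stopwords plus an extra stopwords file, and a training step for a 30-topic model. The directory and file names are placeholders rather than the project's actual paths, and the project's exact option settings are not recorded here, so treat the choice of flags as an assumption.

```python
from pathlib import Path


def mallet_commands(corpus_dir, stopwords_file, num_topics=30, workdir="model"):
    """Build (but do not run) Mallet import and training commands.

    All paths are illustrative placeholders, not the project's real ones.
    """
    work = Path(workdir)
    mallet_file = work / "patents.mallet"  # hypothetical intermediate file

    import_cmd = [
        "mallet", "import-dir",
        "--input", str(corpus_dir),
        "--output", str(mallet_file),
        "--keep-sequence",                         # needed for topic modeling
        "--remove-stopwords",                      # default Mallet stopwords
        "--extra-stopwords", str(stopwords_file),  # additional project list
    ]
    train_cmd = [
        "mallet", "train-topics",
        "--input", str(mallet_file),
        "--num-topics", str(num_topics),
        "--output-topic-keys", str(work / "topic_keys.txt"),
        "--output-doc-topics", str(work / "doc_topics.txt"),
        "--word-topic-counts-file", str(work / "word_topic_counts.txt"),
    ]
    return import_cmd, train_cmd


if __name__ == "__main__":
    for cmd in mallet_commands("scrubbed_patents", "extra_stopwords.txt"):
        print(" ".join(cmd))
```

Each list can be passed to `subprocess.run` once Mallet is installed and on the path.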

Preparation of scrubbed texts and extra stopwords was facilitated through preliminary text analysis of the patents. We processed our total set of patents with the AntConc linguistic corpus analysis tool to create a word list by frequency and also a list of 3-grams terminating in “humanities.” Used in conjunction with AntConc’s concordance tool (to assess the context of words), these lists allowed us to identify words to add to the extra stopwords list and also to a Python script that (minimally) scrubbed the texts to standardize some words (e.g., “students,” “student’s,” “student”; “texts,” “text”) and to consolidate some phrases (e.g., “U.S.” > u_s; “liberal arts” > liberal_arts).
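
The project's actual scrubbing script is not reproduced here. The sketch below is a minimal stand-in showing the two kinds of operation described above: consolidating phrases into single tokens and standardizing word variants. It also includes a small counter for 3-grams ending in a target word, a Python approximation of the kind of list AntConc was used to produce. The rule tables `PHRASES` and `VARIANTS` are hypothetical and contain only the examples mentioned in the text.

```python
import re
from collections import Counter

# Hypothetical rule tables built from the examples in the text only.
PHRASES = {"U.S.": "u_s", "liberal arts": "liberal_arts"}
VARIANTS = {
    "students": "student",
    "student's": "student",
    "student’s": "student",
    "texts": "text",
}

WORD = re.compile(r"[A-Za-z_]+(?:['’][A-Za-z]+)?")


def scrub(text):
    """Consolidate phrases, then standardize word variants."""
    # Phrases first, while the original punctuation is still intact.
    for phrase, token in PHRASES.items():
        text = re.sub(re.escape(phrase), token, text, flags=re.IGNORECASE)

    # Replace each word that has a listed variant; leave others untouched.
    def standardize(match):
        word = match.group(0)
        return VARIANTS.get(word.lower(), word)

    return WORD.sub(standardize, text)


def ngrams_ending_in(text, target="humanities", n=3):
    """Count n-grams whose final word is `target` (case-insensitive)."""
    tokens = re.findall(r"[a-z_]+", text.lower())
    counts = Counter()
    for i in range(n - 1, len(tokens)):
        if tokens[i] == target:
            counts[" ".join(tokens[i - n + 1 : i + 1])] += 1
    return counts


if __name__ == "__main__":
    print(scrub("U.S. students read texts in the liberal arts."))
    print(ngrams_ending_in("the arts and humanities, the digital humanities"))
```

Consolidating phrases before standardizing words matters here: once “liberal arts” has become liberal_arts, the later word-level pass cannot split it apart again.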

Word cloud visualizations of topics were created using the Lexos online text processing platform, whose Visualize > Multicloud tool includes an option for “Topic Clouds” that can be fed the topic-counts file produced when Mallet is used with the --word-topic-counts-file option.
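
For readers who want to inspect the topic-counts file directly rather than through Lexos, here is a minimal Python sketch that groups words by topic and ranks them by count. It assumes each line of the file has the form `index word topic:count topic:count ...`; that layout is an assumption about Mallet's output and should be checked against an actual file. The sample lines in the usage example are invented for illustration and are not taken from the project's models.

```python
from collections import defaultdict


def top_words_by_topic(lines, top_n=10):
    """Return {topic_id: [(word, count), ...]} ranked by descending count.

    Assumes lines shaped like: "<index> <word> <topic>:<count> ...".
    """
    topics = defaultdict(dict)
    for line in lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        word = parts[1]
        for pair in parts[2:]:
            topic, count = pair.split(":")
            topics[int(topic)][word] = int(count)
    return {
        topic: sorted(words.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]
        for topic, words in topics.items()
    }


if __name__ == "__main__":
    # Invented sample lines, for illustration only.
    sample = ["0 information 3:12 7:2", "1 search 3:5", "2 student 7:9"]
    for topic, words in sorted(top_words_by_topic(sample).items()):
        print(topic, words)
```

The ranked word lists per topic are the same information a topic cloud encodes visually through word size.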